This newsletter is longer than usual because it explains what ChatGPT is all about and also includes my Q&A interview with the app itself as to what it can do, what it cannot do, and what are some red flags concerning its usage. My interview also demonstrates its remarkable ability to instantaneously summarize information in readable form, including the ability to write poetry about a topic — all within seconds of being asked to do so. But it also raises ethical and other questions surrounding this advanced form of artificial intelligence.

The media hubbub around ChatGPT, and artificial intelligence in general, requires contextualization. As a former investor in software development in Ukraine, I can attest to the fact that this is an astonishing breakthrough, but also that it won’t replace the world’s knowledge workers anytime soon. This is Siri on steroids, a “chatbot” that can comprehend questions posed in human language and respond with summaries in full sentences, or even in poetry. This has been accomplished by building a platform that has access to a massive database of words and phrases and has the operational ability to immediately stitch together a related answer or summation in sentences or paragraphs. ChatGPT is governed by computerized processes, or sets of rules, called algorithms. This is also how Google can provide instant translations.

ChatGPT represents another step toward the reality that artificial intelligence will eventually become smarter than humans sometime this century. It will also displace many knowledge workers in decades to come, but for now it is a tool and enhancement for anyone curious about virtually anything as well as for journalists, researchers, teachers, students, and managers. But dangers loom, as with any new technology, and these are made apparent in the series of questions I put to ChatGPT. What follows are my screen-saved queries and its responses, which were immediate:

Then I tested it:

I asked it to give me an opinion about the Ukrainian-Russian conflict, keeping in mind that ChatGPT’s database only runs up to December 2021, before the invasion.

Finally, I asked it to write a poem, and within a split second it produced this:

It was gob-smacking, but the machine is only as smart, or as up to date, as the database it relies on and the linguistic roadmap its human developers have uploaded. It cannot act or think on its own.

However, based on my years involved with software, Singularity University, and Silicon Valley denizens, it’s clear that ChatGPT is a major step toward reaching “Technological Singularity,” a hypothetical and unknown future point in time when artificial intelligence (or AI) becomes smarter than humans and when machines will cogitate or think. Some predict this will occur by 2029, in some primitive form, but that’s highly debatable. At the moment, however, ChatGPT and its parent OpenAI (in which Microsoft is a major investor) are leading a race against Google (with its version called LaMDA), Meta (with its Galactica), and dozens more software teams to build machines that comprehend, respond, and think.

Even at this stage, as I noted in my interview, there are red flags. Only a few weeks ago, Meta pulled Galactica days after its launch because it was producing biased, inaccurate, and potentially dangerous answers. This is why on December 10, shortly after ChatGPT was launched, OpenAI’s CEO Sam Altman issued this caveat: “ChatGPT is incredibly limited but good enough at some things to create a misleading impression of greatness. It’s a mistake to be relying on it for anything important right now. It’s a preview of progress; we have lots of work to do on robustness and truthfulness.”

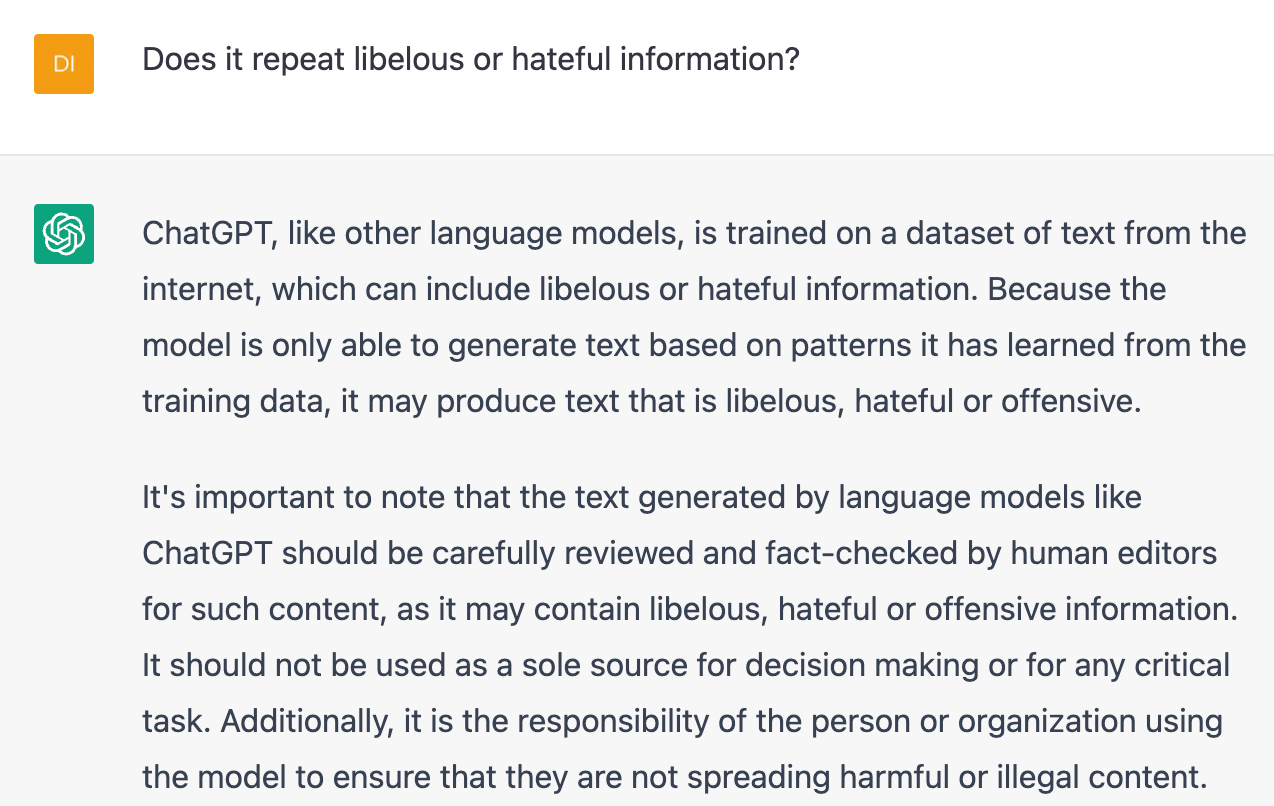

Technology is only as virtuous as the humans who devise it and use it. It can be weaponized, or used to spread disinformation, hate, or terrorism. It is not intrinsically accurate, legal, or moral, which is why these new “generative” platforms must be held accountable. Google, for instance, accesses and stores the world’s largest information database but depends on sources that are traceable. It doesn’t generate, summarize, extrapolate, or concoct its own information.

I think ChatGPT is an important breakthrough and will be invaluable because it can provide instant summaries or drafts or research notes for users. But its output must always be double-checked for accuracy. Even ChatGPT said so.

I had no idea this was going on! I mean, no specific knowledge about such software. I appreciated--and enjoyed--this article, Diane.

I expect that such software will continue the purveyance of propaganda, as lying is one of the major businesses in the human world.

Wouldn’t it be fun though if another form of software could be developed to identify lies, or at least to note the probability that some assertion, claim, analysis, conclusion, etc., could be a lie?

There will have to be software to run on assertions that could be wrong, especially deliberately wrong, but I am certain this could never be foolproof.

My belief, therefore, about this kind of software, is that it is better suited to the dishonest purposes of people than the honest ones.

Thank you Diane! Interesting article as usual. I wonder if they would produce a Woke version of their database so that marginalized, underrepresented people (anyone who’s not a heterosexual white male) will not be offended 😊