A “bot” named Raluca Zdru from ChatGPT emailed me after my “AI and Frankenstein” newsletter appeared recently. She (or he or it) sent me the results of a public survey conducted by ChatGPT’s parent company, OpenAI, in response to an open letter from 1,000 experts asking that AI (Artificial Intelligence) development be paused for six months for safety reasons. The survey showed that two-thirds of respondents agreed that AI development should be stopped for now, 69 percent saw AI as negative for society, and 42 percent said they would vote for a government that paused AI development. The expert letter had warned of “profound risks to society” and said that AI labs were “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict or reliably control.” All this concern is well-placed. ChatGPT may not rule the world anytime soon, but a new study by Cornell University estimates that it could take over many jobs and tasks currently performed by humans, and its results are chilling.

On March 17, Cornell released “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models” (LLMs). Its conclusion was that “around 80 percent of the U.S. workforce could have at least 10 percent of their work tasks affected by the introduction of LLMs while approximately 19 percent of workers may see at least 50 percent of their tasks impacted. We do not make predictions about the development or adoption timeline of such LLMs.”

In simple English, ChatGPT is built on an LLM: a model trained on a massive body of text, billions of words, so that it can generate human-like responses to your prompts. GPT stands for Generative Pre-trained Transformer, a type of model that has been “trained”, at huge expense, to comprehend and answer questions. ChatGPT can also write music, and its sister system, DALL-E 2, can draw anything based on a natural-language description. (I include two samples below.)

Cornell’s study states that workers at all wage levels will be impacted, but higher-income jobs will be more at risk than lower-paying ones that involve physical labor or socialization. It added that workers involved in routine and repetitive tasks are at a higher risk but said “considering each job as a bundle of tasks, it would be rare to find any occupation for which AI tools could do nearly all of the work.”

Good to know, but as time goes on, many people will discover that GPTs can perform so many of their tasks that they eventually end up out of a job. Cornell’s Table 11 lists 34 occupations that are “safe”, meaning they have “no labeled exposed tasks” that GPTs can yet replace. Table 4 lists those that are at risk.

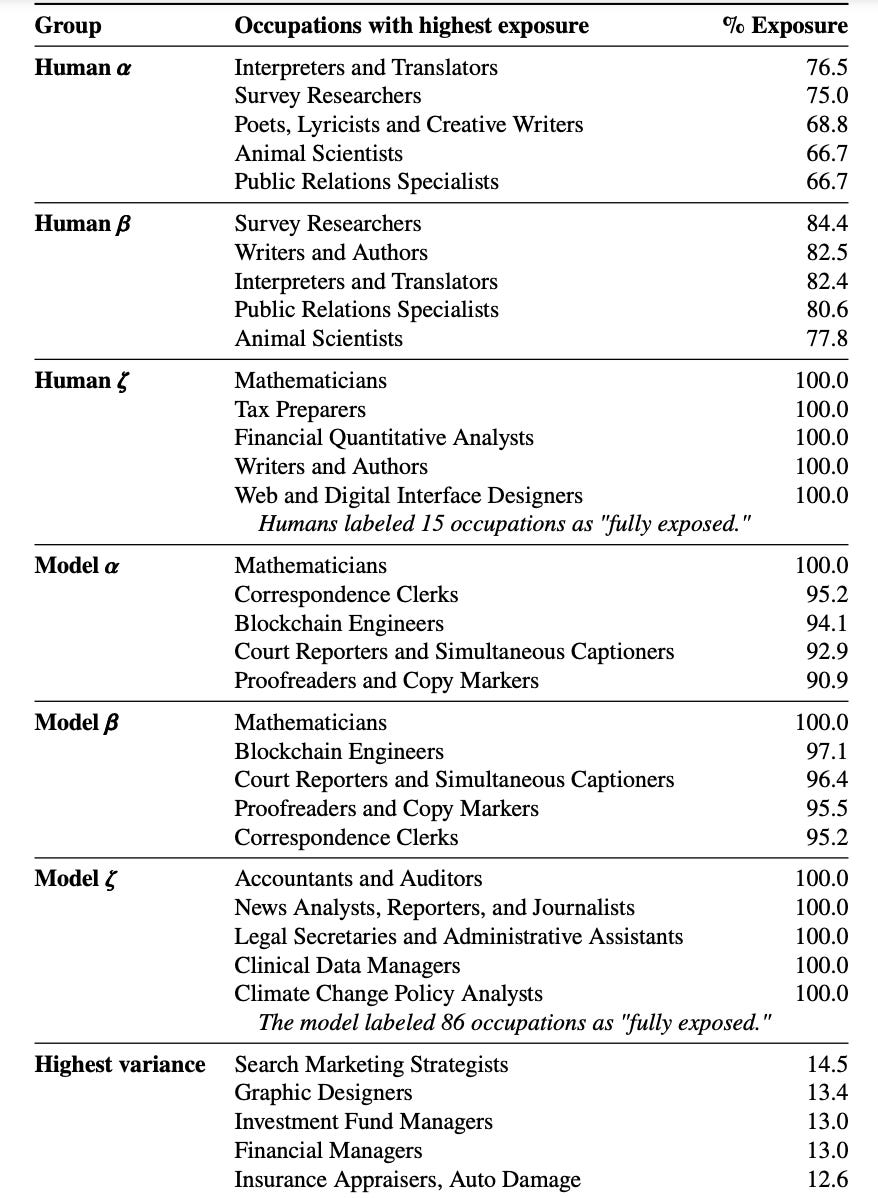

Those at risk:

Cornell’s methods for quantifying “exposure” are complicated, as are the differences between labels such as “Human” and “Model” in Table 4, but all of this is explained in the report itself. Simply put, Table 4 estimates how vulnerable certain occupations are, based on whether the exposure ratings came from humans or from the model. The percentages quantify the proportion of each occupation’s tasks that are “exposed”, or replaceable by GPTs.

It’s quite mind-bending and not definitive, but you get the idea. These LLMs, or GPTs, are going to revolutionize so-called “knowledge” work. Of course, this is nothing new. Desktop publishing (which eliminated typesetting, presses, truck deliveries, and newsrooms) and CAD/CAM (computer-aided design and manufacturing) software transformed or eliminated many occupations. But the “adoption” of job-robbing technologies will vary and is difficult to forecast, as the study noted. For instance, self-driving cars and trucks were slated to replace drivers five years ago but have not done so, because of software difficulties, regulations, capital shortages, insurance issues, infrastructure obstacles, ethical concerns, politics, and other impediments.

What’s certain, however, is that GPTs will continue to evolve. They began as “primitive” versions that could translate, transcribe speech into text, generate images from captions, or check spelling and grammar. But years of human feedback, and billions of dollars, have been invested in training their Large Language Models to discern user intent and to be user-friendly and practical. Their next iteration will be to train themselves and to program and control other digital tools, such as search engines and APIs (application programming interfaces, which deliver a user’s request to a system and send the system’s response back to the user).

“At their limit, LLMs may be capable of executing any task typically performed at a computer,” the study concluded. But there are limitations. As one expert noted: “I would classify ChatGPT as a smart high school freshman, and I don't want a professional to be a smart high school freshman doing triage at a hospital or intake at a doctor's office or a lawyer's office.”

The arts, from music to drawing, will also be drastically altered or eliminated. “ChatGPT can generate melodies and chord progressions for a song or even generate entire compositions. Just remember that ChatGPT is a text-based model after all, which means you will need to input some pretty specific information on things like style, instrumentation, and tempo for the bot to work,” according to an article in Music Tech. OpenAI’s other “system”, the incredible DALL-E 2, can generate images from any text description. Here are two examples. I asked it to create a “Van Gogh version of robots taking over a field”, and here’s what it produced within seconds:

Then I asked for “writer robots” and this was produced in seconds:

Yikes.

It’s daunting, and the disruption will be enormous, which is why Cornell cautioned: “Our results examining worker exposure in the United States underscore the need for societal and policy preparedness to the potential economic disruption posed by LLMs and the complementary technologies that they spawn.”

Clearly, governments must get involved by imposing standards and safety measures for all GPTs, a requirement supported by the public survey that OpenAI itself commissioned and sent to me in an email. And they must do this now.

I really don’t see how “government must be involved” works. In any arms race, and this is just that, there is no stoppering the bottle once the cork is loosened. The better question is around social justice, and whether society shifts its values away from self-interested greed wrapped up in religion and capitalism and balances things for everyone. This is stepping into what was formerly science-fiction lore, and I don’t think most of us are equipped to predict or judge at this point. Governments only react, so I fear things will inevitably go awry.

It is inevitable, and we all know it.